Aug 2, 2023

Apache Avro Decoding Story: Turning a Data Corruption Bug Into a Feature

Tackling complex data challenges to bring realtime streaming data to Web3

Principal Software Engineer

Apache Avro is a robust data serialization framework that’s frequently used with data streaming systems like Apache Kafka and Redpanda. At Goldsky, we use it as the storage format for all our topics.

Avro schemas are stored in a Schema Registry and, most of the time, don’t require any extra attention: a message producer publishes a message and, optionally, registers a schema (if it’s new). A message consumer reads the message and, optionally, fetches the schema from the Schema Registry.

But what if you forget to upload the right schema? Different client libraries provide different guarantees, and we learned the hard way that it can be very easy to accidentally publish a message with an incorrect schema. However, this is a story with a happy ending: we were able to turn the bug into a feature by tweaking the way Avro decoders work!

The Goldsky Mirror Platform

Mirror is our flagship product, which we use to stream onchain data from various blockchains directly into customers’ existing database infrastructure. The idea behind Mirror is to make it much easier for developers to work with event logs, transactions, and traces emitted from the blockchain, streamlining builds for complex (and essential) data pipelines.

Mirror consists of a few key components:

- Redpanda: We use Redpanda as a streaming platform. Redpanda also provides a Schema Registry for storing all Avro message schemas. Redpanda Console is a UI for browsing and managing topics, schemas and connectors.

- Producers: Many producers emit Avro messages to Redpanda. We have Python, Golang and Java clients.

- Consumers: Many consumers read Avro messages from Redpanda. Most of our consumers are Apache Flink applications using the Java client.

The Incident

One sunny day in June, some of our Flink applications started to fail with various deserialization exceptions like:

Caused by: java.lang.ArrayIndexOutOfBoundsException: Index -28 out of bounds for length 2

at org.apache.avro.io.parsing.Symbol$Alternative.getSymbol(Symbol.java:460)

at org.apache.avro.io.ResolvingDecoder.readIndex(ResolvingDecoder.java:283)

at org.apache.avro.generic.GenericDatumReader.readWithoutConversion(GenericDatumReader.java:187)

and

java.lang.OutOfMemoryError: Java heap space

at java.base/java.util.Arrays.copyOf(Unknown Source)

at org.apache.avro.util.Utf8.setByteLength(Utf8.java:122)

at org.apache.avro.io.BinaryDecoder.readString(BinaryDecoder.java:288)

at org.apache.avro.io.ResolvingDecoder.readString(ResolvingDecoder.java:208)

We immediately started debugging this issue and were able to reproduce it locally. What we found was surprising at first: no matter which recent message we started consuming from, the first message was always decoded successfully, but the second always failed. We tried different topics, partitions and offsets. Sometimes the exceptions changed, but all of them came from the Avro decoder.

The OutOfMemoryError was especially puzzling: all records in the affected topics were fairly small. After some debugging (by the way, did you know you can catch OutOfMemoryErrors?), we realized that the Avro decoder was trying to read a string and allocate a few gigabytes of memory for it! 🤯

Another equally puzzling thing: Redpanda Console, which we use for browsing topics, didn’t experience any issues.

The Avro Spec and Why Schemas Are So Important

Apache Avro supports two encodings: binary and JSON. In production environments, binary encoding is the more popular of the two because it’s very efficient. Here’s a quote from the documentation:

Binary encoding does not include field names, self-contained information about the types of individual bytes, nor field or record separators. Therefore readers are wholly reliant on the schema used when the data was encoded.

So an Avro payload is just a sequence of bytes holding the field values back to back, and you need the schema as a map to tell where one value ends and the next begins. Now let’s see how strings specifically are encoded:

a string is encoded as a long followed by that many bytes of UTF-8 encoded character data. For example, the three-character string “foo” would be encoded as the long value 3 (encoded as hex 06) followed by the UTF-8 encoding of ‘f’, ‘o’, and ‘o’ (the hex bytes 66 6f 6f)
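The quoted rule can be reproduced in a few lines. The following is a minimal sketch using only the Java standard library; the class and method names are made up for illustration and are not Avro’s API. It writes the length as a zig-zag variable-length long, as the Avro spec describes for int and long values, followed by the UTF-8 bytes.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Illustrative helper, not part of the Avro library.
final class AvroStringEncoding {
    /** Writes a long as a zig-zag varint: 7 bits per byte, high bit set when more bytes follow. */
    static void writeLong(long value, ByteArrayOutputStream out) {
        long n = (value << 1) ^ (value >> 63); // zig-zag: 0, -1, 1, -2 ... map to 0, 1, 2, 3 ...
        while ((n & ~0x7FL) != 0) {
            out.write((int) ((n & 0x7F) | 0x80));
            n >>>= 7;
        }
        out.write((int) n);
    }

    /** Encodes a string as its UTF-8 byte length followed by the UTF-8 bytes. */
    static byte[] encodeString(String s) {
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeLong(utf8.length, out);
        out.write(utf8, 0, utf8.length);
        return out.toByteArray();
    }

    static String toHex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) sb.append(String.format("%02x ", b));
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println(toHex(encodeString("foo"))); // prints "06 66 6f 6f"
    }
}
```

Note that nothing in these four bytes says “this is a string”: the reader only knows to interpret 06 as a length because the schema tells it a string comes next.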

It seems that, somehow, when reading the payload, the Avro decoder used an incorrect value for the string length, which caused the OutOfMemoryError. But why? And why didn’t it affect Redpanda Console?
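To get a feel for how bad a misaligned read can be, here is a small sketch of the decoding direction. The helper is hypothetical and the byte values are chosen for illustration; they are not taken from the incident. It decodes a zig-zag varint from whatever bytes happen to sit at the current position.

```java
// Illustrative helper, not part of the Avro library.
final class MisalignedRead {
    /** Decodes one zig-zag varint long from the start of the array. */
    static long readLong(byte[] bytes) {
        long n = 0;
        int shift = 0;
        for (byte b : bytes) {
            n |= (long) (b & 0x7F) << shift;
            if ((b & 0x80) == 0) break; // high bit clear: last byte of this number
            shift += 7;
        }
        return (n >>> 1) ^ -(n & 1);    // undo zig-zag
    }

    public static void main(String[] args) {
        // A well-aligned length prefix for "foo".
        System.out.println(readLong(new byte[] {0x06})); // prints 3
        // A single stray byte 0x37 (ASCII '7') read as a number.
        System.out.println(readLong(new byte[] {0x37})); // prints -28
        // Five arbitrary bytes read as a string length: roughly 2 GiB.
        System.out.println(readLong(new byte[] {
            (byte) 0xfe, (byte) 0xff, (byte) 0xff, (byte) 0xff, 0x0f})); // prints 2147483647
    }
}
```

A decoder that trusts such a value as a string length will try to allocate a buffer of that size, and one that trusts it as a union index will get an out-of-range lookup. The -28 above happens to match the index in the first stack trace, although we can’t say from this sketch which byte was actually read in the incident.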

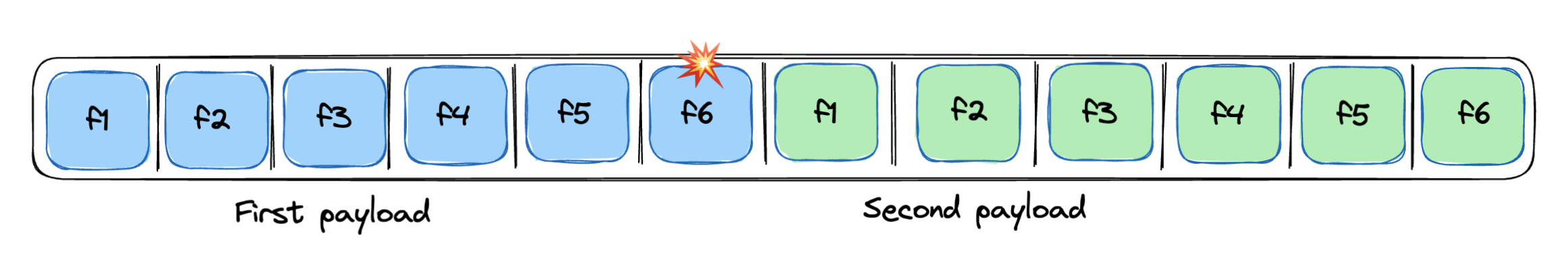

Around this time, we also tracked down the producer emitting the data: a Golang application. A suspicious commit had introduced a new payload field, and after some investigation, we realized that the updated schema was never uploaded to the Schema Registry 😱. So all recent payloads had an additional set of bytes representing the value of the new field.

After that, org.apache.avro.io.BinaryDecoder from the Java client became our main suspect. Here’s what the documentation says:

This class may read-ahead and buffer bytes from the source beyond what is required to serve its read methods.

And indeed, it has an internal buffer (byte[] buf) that can be reused for decoding different payloads.

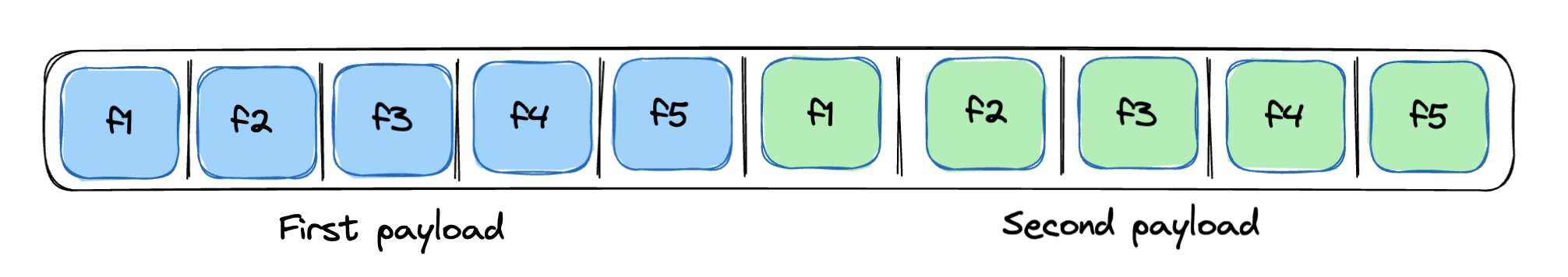

So, to summarize, this is what the decoder expects based on the schema:

Each field consists of a certain number of bytes dictated by the schema. But instead, this is what happens:

The decoder assumes it’s reading the second payload, but it’s actually still reading leftover bytes from the first!
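The effect can be simulated without Avro. In the sketch below, `java.io.BufferedInputStream` stands in for a long-lived read-ahead decoder, and the hypothetical `SwappableInput` stands in for a source whose contents are replaced for every record. The one-field schema and the byte values are assumptions made for illustration; this is not Avro’s actual implementation.

```java
import java.io.BufferedInputStream;
import java.io.EOFException;
import java.io.IOException;
import java.io.InputStream;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

final class StaleBufferDemo {
    /** Hypothetical message source: its contents are swapped for each incoming record. */
    static final class SwappableInput extends InputStream {
        private byte[] data = new byte[0];
        private int pos;
        void setMessage(byte[] message) { data = message; pos = 0; }
        @Override public int read() { return pos < data.length ? data[pos++] & 0xFF : -1; }
    }

    static long readLong(InputStream in) throws IOException {
        long n = 0;
        for (int shift = 0; shift < 64; shift += 7) {
            int b = in.read();
            if (b < 0) throw new EOFException();
            n |= (long) (b & 0x7F) << shift;
            if ((b & 0x80) == 0) break;
        }
        return (n >>> 1) ^ -(n & 1);
    }

    static String readString(InputStream in) throws IOException {
        byte[] utf8 = new byte[(int) readLong(in)];
        for (int i = 0; i < utf8.length; i++) {
            int b = in.read();
            if (b < 0) throw new EOFException();
            utf8[i] = (byte) b;
        }
        return new String(utf8, StandardCharsets.UTF_8);
    }

    /** The registered schema knows one string field; the producer appends an extra long (0x04 = 2). */
    static String[] decodeTwoMessages() {
        byte[] first  = {0x06, 'f', 'o', 'o', 0x04};
        byte[] second = {0x06, 'b', 'a', 'r', 0x04};
        SwappableInput source = new SwappableInput();
        InputStream decoder = new BufferedInputStream(source); // reads ahead and keeps leftovers
        try {
            source.setMessage(first);
            String a = readString(decoder);  // the whole first message is buffered; 0x04 stays unread
            source.setMessage(second);
            String b = readString(decoder);  // starts at the stale 0x04, not at the new message
            return new String[] {a, b};
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        String[] decoded = decodeTwoMessages();
        System.out.println(decoded[0]);               // prints "foo"
        System.out.println(decoded[1].equals("bar")); // prints "false"
    }
}
```

The first record decodes correctly and the second does not, which is the same pattern we saw: the leftover byte of the undeclared field is interpreted as the length of the next record’s string.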

Different Ways to Decode

Is there anything we can do to fix decoding without reprocessing a lot of data? And why didn’t it affect Redpanda Console, which uses a decoding library written in Golang (hamba/avro)?

It turns out that using a shared buffer is not really necessary. It may result in some performance improvements, but it looks like that’s not how hamba/avro is implemented, for example. Even the Java implementation of Avro comes with a different decoder called DirectBinaryDecoder, a non-buffering version of BinaryDecoder.

After switching to DirectBinaryDecoder, we no longer saw any deserialization issues! 🎉 The payloads are still technically incorrect (they don’t match the schema), but we’re able to read them just fine. This was only possible because we added the new field at the very end of the payload, so it doesn’t shift any other field and can be safely ignored. Had we put the new field in the middle, we would have hit the same issue, because every field after it would be read from the wrong offset.
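Here is a rough sketch of why the position of the new field matters once nothing is carried over between records. It assumes a hypothetical registered schema with two string fields and a producer that adds one undeclared long; each message is read from a fresh buffer, which approximates a non-buffering decoder but is not DirectBinaryDecoder itself.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class FieldOrderDemo {
    static long readLong(ByteBuffer in) {
        long n = 0;
        for (int shift = 0; shift < 64; shift += 7) {
            byte b = in.get();
            n |= (long) (b & 0x7F) << shift;
            if ((b & 0x80) == 0) break;
        }
        return (n >>> 1) ^ -(n & 1);
    }

    static String readString(ByteBuffer in) {
        byte[] utf8 = new byte[(int) readLong(in)];
        in.get(utf8);
        return new String(utf8, StandardCharsets.UTF_8);
    }

    /** Reads the two string fields the registered schema declares and ignores any trailing bytes. */
    static String[] decode(byte[] message) {
        ByteBuffer in = ByteBuffer.wrap(message); // fresh state per message: no stale bytes
        return new String[] {readString(in), readString(in)};
    }

    public static void main(String[] args) {
        // Undeclared long (0x04 = 2) appended at the end: both declared fields are intact.
        String[] atEnd = decode(new byte[] {0x06, 'f', 'o', 'o', 0x06, 'b', 'a', 'r', 0x04});
        System.out.println(atEnd[0] + " " + atEnd[1]);     // prints "foo bar"

        // Same long inserted between the two strings: it is taken for the second string's length.
        String[] inMiddle = decode(new byte[] {0x06, 'f', 'o', 'o', 0x04, 0x06, 'b', 'a', 'r'});
        System.out.println(inMiddle[1].equals("bar"));     // prints "false"
    }
}
```

With the extra bytes at the end, the reader simply stops before reaching them. With the extra bytes in the middle, the damage stays within one record but the record itself is unreadable.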

Lessons Learned

When using Avro:

- Never update message schemas manually! Automate it as part of your CI/CD process, or at least make sure producers register new schemas at runtime.

- Always add new fields at the end of the payload 😉.

- Study how your serializers and deserializers work! You might be surprised to find ways to control their behavior.
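As one possible shape for the first lesson, the sketch below builds the HTTP request that registers a schema under a subject. It assumes the Confluent-compatible Schema Registry REST API (`POST /subjects/{subject}/versions`), which Redpanda’s Schema Registry also exposes; the registry URL, subject name and schema are placeholders, and `jsonEscape` is a deliberately minimal helper rather than a full JSON encoder.

```java
import java.net.URI;
import java.net.http.HttpRequest;

final class SchemaRegistration {
    /** Minimal escaping for embedding a schema in a JSON string (backslash, quote, newline only). */
    static String jsonEscape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"").replace("\n", "\\n");
    }

    /** Builds (but does not send) the registration request for the given subject. */
    static HttpRequest registerRequest(String registryUrl, String subject, String avroSchemaJson) {
        String body = "{\"schema\": \"" + jsonEscape(avroSchemaJson) + "\"}";
        return HttpRequest.newBuilder(URI.create(registryUrl + "/subjects/" + subject + "/versions"))
                .header("Content-Type", "application/vnd.schemaregistry.v1+json")
                .POST(HttpRequest.BodyPublishers.ofString(body))
                .build();
    }

    public static void main(String[] args) {
        // Placeholder values: adjust to your own registry, subject and schema.
        HttpRequest request = registerRequest(
                "http://localhost:8081", "events-value", "{\"type\": \"string\"}");
        System.out.println(request.method() + " " + request.uri());
        // prints "POST http://localhost:8081/subjects/events-value/versions"
    }
}
```

A CI/CD step or a producer’s startup code could send this with `java.net.http.HttpClient` and fail the deployment if the registry rejects the schema, so a producer never ships a payload layout the registry doesn’t know about.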
